‘Fox guarding the henhouse’: AMA, Vaccine Integrity Project to conduct their own vaccine safety and efficacy reviews

By Suzanne Burdick, Ph.D. | The Defender | February 11, 2026

The American Medical Association (AMA) is teaming up with the Vaccine Integrity Project to conduct its own review of vaccine safety and efficacy, claiming that advisers to the Centers for Disease Control and Prevention (CDC) are no longer doing a good enough job.

The groups said Wednesday in a press release that “for decades,” the CDC’s Advisory Committee on Immunization Practices (ACIP) had “served as the engine of evidence-based vaccine policy” for the U.S. “That system has now effectively collapsed.”

U.S. Department of Health and Human Services (HHS) Press Secretary Emily G. Hilliard told The Defender the claim that ACIP’s evidence-based process has collapsed is “categorically false.” She said:

“ACIP continues to remain the nation’s advisory body for vaccine use recommendations driven by gold standard science. While outside organizations continue to conduct their own analyses and confuse the American people, those efforts do not replace or supersede the federal process that continues to guide vaccine policy in the United States.”

The Vaccine Integrity Project, based at the University of Minnesota’s Center for Infectious Disease Research and Policy (CIDRAP), says it is dedicated to “safeguarding vaccine use in the U.S.”

The AMA will work with the project to review vaccines for the 2026-2027 respiratory virus season. These include immunizations against COVID-19, influenza and respiratory syncytial virus (RSV), according to the press release.

CIDRAP Director Michael Osterholm said in a statement that the goal is “to restore peace of mind for clinicians and patients by ensuring that experts are continuously evaluating vaccine safety and effectiveness using transparent, evidence-based methods.”

Children’s Health Defense (CHD) General Counsel Kim Mack Rosenberg said it’s unlikely that the groups will restore people’s peace of mind about vaccines. She said:

“Unfortunately, the AMA and the Vaccine Integrity Project support a narrative about vaccines that is being exposed more and more as problematic and contradicted by what people are seeing with their own eyes.

“The system is broken and efforts to prop it up from the inside are being exposed for conflicts of interest and flawed analyses.”

The groups’ review process looks similar to how the ACIP traditionally worked, but they won’t issue recommendations. Instead, they will share their review results with medical societies, which can write recommendations for their patient demographic.

The AMA and the Vaccine Integrity Project said they will also involve medical societies and public health and healthcare organizations to craft policy questions.

Review members will disclose “relevant” conflicts of interest, according to the press release. However, “relevant” was left undefined.

The AMA and Vaccine Integrity Project said in a statement:

“The goal of this work is to ensure a deliberative, evidence-driven approach to produce the data necessary to understand the risks and benefits of vaccine policy decisions for all populations — the approach traditionally used by the federal government.”

The effort may generate more confusion among Americans who are torn between looking to the federal government and looking to medical societies for vaccine guidance, according to Trial Site News.

“The country is no longer operating with a single, uncontested center of vaccine-policy gravity,” Trial Site News wrote.

‘Like asking the fox to guard the henhouse’

The Vaccine Integrity Project, launched in April 2025, is funded by an unrestricted gift from iAlumbra, a nonprofit founded by Walmart heiress and philanthropist Christy Walton.

The Robert Wood Johnson Foundation, The Greenwall Foundation and Lasker Foundation are also listed among the project’s funders.

The Vaccine Integrity Project declined The Defender’s request for a list of donation amounts and names of any individual donors.

Former CDC Director Rochelle Walensky serves as the Vaccine Integrity Project’s adviser of medical affairs. In 2022, Walensky admitted the CDC gave false information about COVID-19 vaccine safety monitoring.

Already, the Vaccine Integrity Project released a review of the hepatitis B vaccine that supported vaccinating all newborns at birth, rather than delaying when the mother has tested negative for hepatitis B. The project is currently reviewing the human papillomavirus (HPV) vaccine.

“Trusting the AMA and the Vaccine Integrity Project to objectively review vaccine safety feels a lot like asking the fox to guard the henhouse,” said Nebraska chiropractor Ben Tapper.

Mack Rosenberg said the repeated failures of such organizations to “truly and comprehensively” analyze vaccine safety data have led to “increasing distrust among the public — and with good reason.”

AMA ‘a political force,’ not a ‘neutral medical association’

In 2025, the AMA spent nearly $24 million on lobbying, making it one of the top 10 groups trying to influence government policy, according to OpenSecrets.

“This is not the behavior of a neutral medical association. It is the strategy of a political force,” wrote Jason Altmire in an op-ed for RealClearHealth.

Altmire, a former hospital and health insurance executive who served in the U.S. House of Representatives, is an adjunct professor of healthcare management at the Texas Tech University Health Sciences Center.

Tapper questioned whether the AMA and the Vaccine Integrity Project would sufficiently assess the safety of vaccines.

For many people, the concern isn’t that vaccines can have benefits, he said. “The concern is whether safety data is fully transparent, whether adverse event reporting is thoroughly investigated, whether conflicts of interest are disclosed and whether risk-benefit analyses are stratified appropriately by age and health status.”

The AMA, which touted 2024 revenues of $546 million, was criticized during the COVID-19 pandemic for deferring to political ideology rather than medical facts.

Its “AMA COVID-19 Guide: Background/Messaging on Vaccines, Vaccine Clinical Trials & Combatting Vaccine Misinformation” encouraged doctors to use certain words and avoid others. For instance, “stay-at-home order” replaced “lockdown,” and “deaths” replaced “hospitalization rates.”

The AMA in August 2025 was disinvited from the CDC’s vaccine advisory committee’s workgroups.

This article was originally published by The Defender — Children’s Health Defense’s News & Views Website under Creative Commons license CC BY-NC-ND 4.0.

Epstein Pitched JPMorgan Chase on Plan to Get Bill Gates ‘More Money for Vaccines’

By Michael Nevradakis, Ph.D. | The Defender | February 10, 2026

In the years leading up to the COVID-19 pandemic, Bill Gates and key figures from the Gates Foundation regularly interacted with Jeffrey Epstein, discussing ways to finance and develop a global pandemic preparedness and vaccination network.

The communications between Gates and Epstein were included in the “Epstein Files” released Jan. 30 by the U.S. Department of Justice (DOJ). Last year’s passage of the bipartisan Epstein Files Transparency Act prompted the release.

Sayer Ji told The Defender the files show that Epstein “functioned as a switchboard” connecting “hedge funds, central banks, billionaires, academic institutions and global health initiatives.”

Ji published his analysis of health- and medical-related information in the files in a series of Substack articles and posts on X.

Seamus Bruner, director of research at the Government Accountability Institute, said the files revealed the workings of a network of “Controligarchs on steroids, but with shocking new receipts.”

Bruner said the files showed that Epstein helped develop “the architecture for pandemic profiteering” years before the COVID-19 pandemic.

The documents largely date from the 2010s — after Epstein’s 2008 conviction for soliciting underage sex and his inclusion on a registry of sex offenders.

Ji noted that months before the start of the COVID-19 pandemic, many of the same actors who appear in the Epstein files participated in Event 201 — a simulation of a global pandemic caused by a coronavirus.

The pandemic preparedness infrastructure built in the years before the pandemic helped lead to this simulation, Ji wrote.

According to The Hill, members of the U.S. Congress began reviewing unredacted versions of the documents on Monday.

Rep. Thomas Massie (R-Ky.), who co-sponsored the Epstein Files Transparency Act along with Rep. Ro Khanna (D-Calif.), told The Defender the documents’ release is about justice, not politics.

“Rep. Ro Khanna and I have tried to keep the Epstein files from being political. The Democrats want to make it about Trump, and the Republicans want to make it about the Clintons. We want to make it about the survivors and getting them justice and transparency,” Massie said.

Gates, Epstein and the ‘architecture behind pandemics as a business model’

Ji’s series of Substack posts revealed what he described as “a 20-year architecture behind pandemics as a business model — with Bill Gates at the center of the network,” along with multinational financial institutions like JPMorgan Chase.

The documents, dating from 2011 to 2019, illustrate an “architecture whose foundations predate the COVID-19 era by more than a decade,” Ji wrote. He said they constitute evidence of “a major Wall Street bank asking a convicted sex offender to define the architecture of a Gates-linked charitable fund.”

The documents included several emails outlining the development of a Gates-led charitable fund. A Feb. 17, 2011, email from JPMorgan Chase’s Juliet Pullis to Epstein included questions from the “team that is putting together some ideas for Gates.”

Epstein’s reply outlined how this fund could be structured. The proposal would be developed further in the following months.

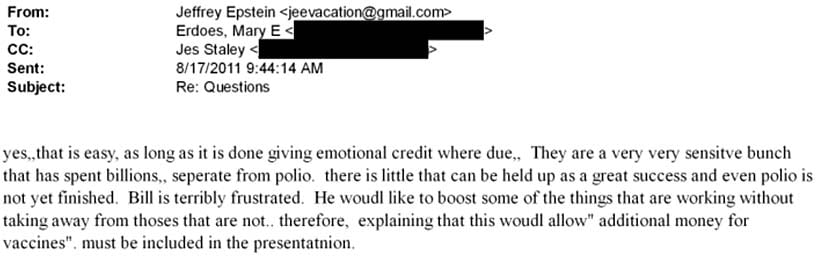

A July 26, 2011, email from Epstein to JPMorgan Chase executive Jes Staley, on which Boris Nikolic, Gates’ chief science and technology adviser, was copied, described a “silo based proposal that will get bill [Gates] more money for vaccines.”

By Aug. 17, 2011, Staley and Mary Erdoes, then-CEO of JPMorgan Asset and Wealth Management, were discussing more details of the proposed fund, including developing “an offshore arm — especailly for vaccines” and projecting “billions of dollars” in donations within two years.

In a response later that day, Epstein said Gates was “terribly frustrated” at the slow pace of establishing the fund. He said Gates was insistent that “additional money for vaccines” be included in an upcoming presentation about the fund.

By Aug. 31, 2011, JPMorgan Chase had apparently developed a proposal called “Project Molecule,” where the bank would partner with the Gates Foundation to develop a perpetual charitable fund for pandemic preparedness and surveillance, vaccine promotion and disease eradication.

According to Ji, the proposal contains many of the ideas Epstein had previously discussed with JPMorgan Chase executives. It also contained plans to spend millions of dollars to purchase oral polio vaccines for Afghanistan and Pakistan, a rotavirus vaccine for Latin America, and a meningitis vaccine for Africa.

The proposal suggested that Melinda Gates chair the fund’s strategic program/grant and distribution committee and that Erdoes, Warren Buffett, Jordan’s Queen Rania and Seth Berkley, CEO of Gavi, the Vaccine Alliance, also participate. The Gates Foundation funded Gavi’s launch in 1999 and holds a permanent seat on its board.

Ji wrote that while Epstein’s name does not appear in the Project Molecule proposal, it acts as the “institutional translation of the architecture he was sketching informally.”

By 2013, these efforts appear to have led to the launch of the Global Health Investment Fund. A confidential Sept. 23, 2013, briefing described the fund as “the first investment fund focused on global health drug and vaccine development.” The fund promised investors annual returns of 5%-7%.

Among the attendees at the fund’s September 2013 launch were JPMorgan Chase CEO Jamie Dimon and representatives of Pfizer, Merck and GlaxoSmithKline (now GSK).

Gates could ‘work with anyone on earth’ but ‘chose a registered sex offender’

According to Ji, Nikolic’s involvement is significant. In August 2013, Gates and Epstein signed an agreement, in which Gates “specifically requested” that Epstein “personally serve” as Nikolic’s representative. The letter noted Epstein’s “existing collegial relationship” with Gates.

“This agreement was executed five years after Epstein’s conviction for soliciting a minor for prostitution,” Ji wrote. “Gates had the resources to work with anyone on earth. He chose a registered sex offender — and put it in writing.”

The documents showed that a month earlier, on July 18, 2013, Epstein authored a draft email apparently intended for Gates. It references Epstein’s friendship with Gates, his disappointment that Gates sent him an “unfriendly strongly worded email,” and sordid communications the two had apparently shared previously.

“TO add insult to the injury you them implore me to please delete the emails regarding your std, your request that I provide you antibiotics that you can surreptitiously give to Melinda and the description of your penis,” Epstein wrote.

In a video posted on X, Michael Kane, director of advocacy for Children’s Health Defense, said that while it’s unknown whether Epstein ever sent that email to Gates, “the next month they’re in a contract together.”

“I think Bill Gates got the message,” Kane said.

In November 2023, a federal judge approved a $290 million settlement between JPMorgan Chase and over 100 women who accused Epstein of sexual abuse. The women alleged that JPMorgan Chase continued doing business with Epstein despite internal warnings over a span of several years.

“JPMorgan banked Epstein for years despite clear red flags — over $1 billion in suspicious transactions flagged internally and ignored. They knew. They didn’t care,” wrote The Truth About Cancer.

Did Epstein play role in launch of the ‘biosecurity state’?

According to Ji, the documents provide a roadmap for how a pandemic preparedness infrastructure was developed and how it helped make Event 201 possible.

“By the time Event 201 convened, the architecture … was no longer conceptual. It had been funded, structured, bonded, insured, staffed, and legally papered. What remained was the rehearsal,” Ji wrote.

September 2014 documents show that Gates disclosed his upcoming meeting with President Obama to Epstein, just as an adviser to then-Israeli Prime Minister Ehud Barak sent Epstein an invitation to a private, off-the-record reception with Obama the following month.

Ji said the communications occurred during “the week Ebola was formally reclassified as a threat to international peace and security.” He said the timing is significant, as this “was the week the biosecurity state was born.”

According to Ji, these developments helped activate the infrastructure outlined in Project Molecule, where Epstein acted as a node for Ebola-related project proposals.

This included Epstein receiving a United Nations (U.N.) diplomat’s proposal for the development of a “Nexus Centre for peace and health” that would take “into account the serious impact of Ebola,” and a proposal by a group of scientists for a pre-symptomatic Ebola detection system using PCR testing.

The scientists behind the proposal — affiliated with a U.S. military biolab at Fort Detrick, the Centers for Disease Control and Prevention and the National Institutes of Health — asked Epstein to send the proposal to Gates and the Gates Foundation.

By October 2014, Epstein was warning Kathy Ruemmler, then White House counsel to Obama, of the political cost if Obama didn’t take action on Ebola. By 2015, Epstein was acting as an intermediary in efforts to convene global experts who would “discuss how we can most effectively address and prevent pandemics.”

The proposal, by the International Peace Institute’s Terje Rød-Larsen, led to the convening of a May 2015 closed-door meeting in Geneva, Switzerland, titled “Preparing for Pandemics: Lessons Learned for More Effective Responses.” The World Health Organization (WHO), World Bank and U.N. were involved with the meeting.

The meeting’s agenda included sessions addressing “how pandemics should be anticipated, how authority should be exercised, how multiple stakeholders should be coordinated, and — critically — what legal, institutional, and financial mechanisms must be put in place in advance to enable rapid, centralized response,” Ji wrote.

According to Ji, the COVID-19 pandemic response has its roots in the 2014 Ebola response, as Ebola “was the first disease to formally justify the suspension of normal political and sovereign constraints on a global scale. … When the next global health emergency arrived — COVID-19 — the playbook was already written.”

“Epstein appears in the background of precisely these formative conversations — serving as a connector between global finance, philanthropic capital, and biological risk governance,” Ji told The Defender.

Epstein involved in ‘strain pandemic simulation’ two years before COVID

By 2017, these conversations led to proposals for pandemic simulations.

In a January 2017 iMessage thread between Epstein and an unidentified physician seeking help in finding a new job, the physician cited “expertise with public health security.”

The physician, who had experience at the U.N., WHO, Gates Foundation and World Bank, said he “just did pandemic simulation,” which could become a “big platform.”

Referring to Gates, the physician told Epstein, “He hates mental health but he’s crazy about vaccines and autism stuff. That could be start to a more broad conversation.”

A March 2017 email chain, which included Epstein and Gates, discussed efforts by the then-bgC3, Gates’ private strategic office, to develop “Follow-up recommendations and/or technical specifications for strain pandemic simulation.”

Ji noted that in 2017, the Coalition for Epidemic Preparedness Innovations (CEPI) was launched at the World Economic Forum (WEF), with Gates Foundation funding and a goal of creating “pandemic-busting vaccines” within 100 days. Later that year, the World Bank issued the first-ever pandemic bonds.

Event 201, held just six weeks before the first publicly acknowledged COVID-19 cases were announced, involved the Gates Foundation, WEF and the Johns Hopkins Center for Health Security. Global financial institutions, media organizations and intelligence agencies also participated.

The simulation focused on the response to a novel coronavirus outbreak by governments, pharmaceutical companies, media outlets and social media platforms.

Ji said the Epstein Files don’t show that COVID-19 was planned or manufactured, or that Event 201 led to COVID-19. Instead, they prove that “the institutional infrastructure to capitalize on exactly this kind of crisis was already built, tested, staffed, and insured.”

This article was originally published by The Defender — Children’s Health Defense’s News & Views Website under Creative Commons license CC BY-NC-ND 4.0.

No, Al-Jazeera, Climate Change Hasn’t Altered African Flood and Drought Patterns

By Anthony Watts | Climate Realism | February 3, 2026

Al Jazeera (AJ) recently published an article titled “Drought in the east, floods in the south: Africa battered by climate change” by Haru Mutasa, in which the reporter details recent experiences of drought in East Africa and flooding in southern Africa, asserting that those weather events are proof that climate change is battering the continent. This is false. The article relies on flimsy content such as personal observation, interviews, and evocative imagery to imply causation, ignoring data and trends that show no appreciable changes in flood or drought patterns over time.

Mutasa asserts that “rising seas and intensifying storms” and shifting rainfall patterns are already devastating livelihoods, citing the author’s personal field observations as evidence of a broader climate crisis. Readers are shown photos of dry riverbeds, dead livestock, submerged neighborhoods, and distressed residents, then invited to connect these scenes directly to global warming. The emotional impact is real, but emotion is not evidence.

Let us start with AJ’s most basic error: weather is not climate. Climate is defined by long-term patterns measured over decades, typically 30 years or more. A drought in one region followed by floods in another over a few weeks or months says nothing about a durable climate trend. Africa’s climate has always been highly variable, with sharp swings driven by ocean–atmosphere cycles such as the El Niño–Southern Oscillation and the Indian Ocean Dipole. Short-term extremes, sometimes back-to-back, are a known feature of the region, not newly discovered diagnostic proof of climate change.

The historical record bears this out. Southern and eastern Africa experienced severe droughts and catastrophic floods long before modern greenhouse gas emissions rose. Mozambique’s Limpopo River basin, featured prominently in the article’s flood imagery, has a long history of major inundations, including the devastating 2000 Mozambique floods, which displaced hundreds of thousands and occurred during a La Niña year. East Africa’s Horn has likewise seen repeated, multi-year droughts throughout the twentieth century, interspersed with episodes of extreme rainfall. These precedents matter because they show that today’s events are consistent with a long pattern of variability rather than proving a novel climate regime.

In fact, lake-sediment records show that East African droughts and floods have varied on a roughly 50-year cycle across centuries.

When measured data are consulted instead of anecdotes, the alarm bells fade. Climate at a Glance summarizes the global evidence in “Floods” and “Drought,” explaining that there is low confidence in any increasing trend of global flood frequency or magnitude and that drought trends are regionally mixed, not universally worsening. These conclusions align with the cautious language used by the Intergovernmental Panel on Climate Change (IPCC) Sixth Assessment Report (AR6), which emphasizes uncertainty and regional variability rather than blanket claims of intensification.

The AJ article substitutes interviews for analysis. Quoting local residents and aid workers about hardship may document suffering, but it does not diagnose cause. Infrastructure deficits, land-use changes, deforestation, river management, dam releases upstream, population growth in floodplains, and limited early-warning systems all play decisive roles in disaster outcomes across Africa. Climate Realism has repeatedly shown how media coverage overlooks these factors while attributing complex events to climate change by default, as catalogued in its Africa-related reporting and extreme-weather analyses accessible via Climate Realism’s search on floods and droughts and Climate Realism’s coverage of drought claims.

Even within the article’s narrative, local management issues loom large. Mutasa notes dam releases in South Africa’s Mpumalanga province sent additional water downstream into Mozambique—an operational decision with immediate hydrological consequences that has nothing to do with global temperature or climate change. Treating such factors as footnotes while elevating climate change as the primary driver of flooding misinforms readers about where real risk reduction lies.

Serious climate reporting distinguishes between events and trends, and between personal experiences and measured evidence.

By presenting interviews and moment-in-time scenes as confirmation of a continent-wide climate verdict, Al Jazeera is misleading its audience by making a causal connection where data show none. Africa’s vulnerability to climate and weather extremes is real, but the causes are multifaceted and long-standing. Ignoring historical precedents and measured trends in favor of an alarming narrative does not inform the public; it misleads it with false headlines.

Bad Science, Big Consequences

How the influential 2006 Stern Review conjured up escalating future disaster losses

By Roger Pielke Jr. | The Honest Broker | February 2, 2026

For those who haven’t observed climate debates over the long term, today it might be hard to imagine the incredible influence of the 2006 Stern Review on The Economics of Climate Change.¹

The Stern Review was far more than just another nerdy report of climate economics. It was a keystone document that reshaped how climate change was framed in policy, media, and advocacy, with reverberations still echoing today.

The Review was commissioned in 2005 by the UK Treasury under Chancellor Gordon Brown and published in 2006, with the aim of assessing climate change through the lens of economic risk and cost–benefit analysis. The review was led by Sir Nicholas Stern, then Head of the UK Government Economic Service and a former Chief Economist of the World Bank, from the outset giving the effort unusual stature for a policy report.

As the climate issue gained momentum in the 2000s, the Review’s conclusions that climate change was a looming emergency and that virtually any cost was worth bearing in response were widely treated as authoritative. The Review shaped climate discourse far beyond the United Kingdom and well beyond the confines of economics.

One key aspect of the Stern Review overlaps significantly with my expertise: the economic impacts of extreme weather. In fact, that overlap has a very surprising connection, which I’ll detail below, and it explains why back in 2006 I was able to identify the report’s fatal flaws on the economics of extreme weather in real time, and publish my arguments in the peer-reviewed literature soon thereafter.

But I’m getting ahead of myself.

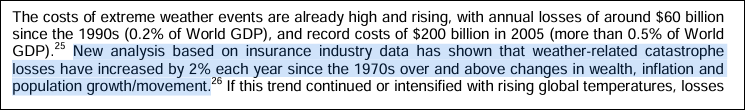

I have just updated through 2025 the figure below that compares the Stern Review’s prediction of post-2005 increases in disaster losses as a percentage of global GDP with what has actually transpired.

Specifically, the figure shows in light grey the Stern Review’s prediction for increasing global disaster losses, as a percentage of GDP, from 2006 through 2050.² These values in grey represent annual average losses, meaning that, for the prediction to verify over time, about half of annual losses would lie above the grey bars and about half below.

The black bars in the figure show what has actually occurred (with details provided in this post last week). You don’t need fancy statistics to see that the real world has consistently undershot the Stern Review’s predictions over the past two decades.

The Stern Review forecast rapidly escalating losses to 2050, when losses were projected to be about $1.7 trillion in 2025 dollars. The Review’s prediction for 2025 was more than $500 billion in losses (average annual). In actuality, losses totaled about $200 billion in 2025.

The forecast miss is not subtle.

How did the Stern Review get things so wrong?

The answer is also not subtle and can be summarized in two words: Bad science.

Let’s take a look at the details. The screenshot below comes from Chapter 5 of the Review and explains its source for developing its prediction, cited to footnote 26.

As fate would have it, footnote 26 goes to a white paper that I commissioned for a workshop that I co-organized with Munich Re in 2006 on disasters and climate change.

That white paper, by Muir-Wood et al., is the same paper that soon after played the starring role in a fraudulent graph inserted into the 2007 IPCC report (yes, fraudulent). You can listen to me recounting that incredible story, with rare archival audio.

But I digress . . . back to The Stern Review, which argued:

If temperatures continued to rise over the second half of the century, costs could reach several percent of GDP each year, particularly because the damages increase disproportionately at higher temperatures . . .

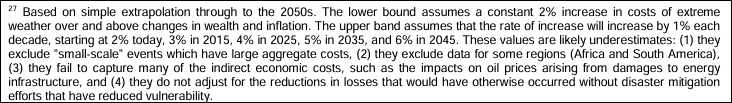

The report presented its prediction methodology in footnote 27, shown in full below, which says: “These values are likely underestimates.”

Where do these escalating numbers come from? Who knows.

They appear to be just made up out of thin air. The predictive numbers do not come from Muir-Wood et al., who do not engage in any form of projection.

The 2% starting point for increasing losses, asserted in the blue highlighted passage in the image above, also does not appear in Muir-Wood et al., which in fact says:

When analyzed over the full survey period (1950 – 2005) the year is not statistically significant for global normalized losses. . . For the more complete 1970-2005 survey period, the year is significant with a positive coefficient for (i.e. increase in) global losses at 1% . . .

The Stern Review seems to have turned 1% into 2% and failed to acknowledge that over the longer-period 1950 to 2005, there was no increasing trend in losses as a proportion of GDP. The escalating increase in annual losses from 2% to 3%, 4%, 5%, 6% every decade is not supported in any way in the Stern Review, nor is it referenced to any source.
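To give a feel for what that escalation implies, here is a minimal sketch that compounds the decade-by-decade rates quoted above. It is not the Review's method, which the Review does not document. It assumes, purely for illustration, that each rate is an annual growth rate in losses as a share of GDP, that each rate applies for exactly ten years, and that the starting loss share is normalized to 1.0. The names `DECADE_RATES` and `cumulative_multiplier` are hypothetical.

```python
# Illustrative only: compounds the decade-by-decade growth rates quoted above.
# Assumptions (not taken from the Stern Review itself):
#   - each rate is an annual growth rate in losses as a share of GDP
#   - each rate applies for exactly ten consecutive years
#   - the starting loss share is normalized to 1.0

DECADE_RATES = [0.02, 0.03, 0.04, 0.05, 0.06]  # 2%, 3%, 4%, 5%, 6%
YEARS_PER_DECADE = 10


def cumulative_multiplier(rates, years_per_step=YEARS_PER_DECADE):
    """Return the factor by which the loss share grows after all steps."""
    multiplier = 1.0
    for rate in rates:
        # Compound this decade's annual rate over its ten years.
        multiplier *= (1.0 + rate) ** years_per_step
    return multiplier


# Escalating schedule versus two constant-rate schedules of the same length.
escalating = cumulative_multiplier(DECADE_RATES)
constant_2pct = cumulative_multiplier([0.02] * len(DECADE_RATES))
constant_1pct = cumulative_multiplier([0.01] * len(DECADE_RATES))

if __name__ == "__main__":
    print(f"escalating 2%-6%: x{escalating:.2f}")
    print(f"constant 2%:      x{constant_2pct:.2f}")
    print(f"constant 1%:      x{constant_1pct:.2f}")
```

Under these assumptions, the escalating schedule multiplies the loss share roughly sevenfold over five decades, compared with less than threefold at a constant 2% and less than twofold at a constant 1%. The choice of starting rate and the unexplained escalation therefore drive most of the projected increase.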

When the Stern Review first came out, I noticed this curiosity right away, and did what I thought we scholars were expected to do when encountering bad science with big implications — I wrote a paper for peer review.

My paper was published in 2007 and clearly explained the misuse of Muir-Wood et al. and other significant and seemingly undeniable errors in the Stern Review.

Pielke Jr, R. (2007). Mistreatment of the economic impacts of extreme events in the Stern Review Report on the Economics of Climate Change. Global Environmental Change, 17(3-4), 302-310.

I explained in that paper:

This brief critique of a small part of the Stern Review finds that the report has dramatically misrepresented literature and understandings on the relationship of projected climate changes and future losses from extreme events in developed countries, and indeed globally. In one case this appears to be the result of the misrepresentation of a single study. This cherry picking damages the credibility of the Stern Review because it not only ignores other relevant literature with different conclusions, but it misrepresents the very study that it has used to buttress its conclusions.

Over my career in research, I’ve had some hits and some misses, but I’m happy to report that I got this one right at the time and it has held up ever since. Of course, perhaps a more significant outcome of this episode, and a key part of my own education in climate science, is that my paper was resoundingly ignored.

One reason that science works is that scientists share a commitment to correct errors when they are found in research, bringing forward reliable knowledge and leaving behind that which doesn’t stand the test of time.

I learned decades ago that in areas where I published, self-correction was often slow to work, if not just broken. Over the decades that pathological characteristic of key areas of climate science has not much improved (e.g., see this egregious example).

The Stern Review helped to launch climate change into top levels of policy making around the world. Further, we can draw a straight line from the Review to the emergence of (often scientifically questionable) “climate risk” in global finance a decade later. It still rests on a foundation of bad science.

1 My ongoing THB series on insurance and “climate risk” in finance prompted me to revisit the 2006 Stern Review, hence this post.

2 Note that the Review explicitly referenced global economic losses from extreme weather events as tabulated by Munich Re, which is the same dataset that I often use, such as in last week’s THB post on global disaster losses. The comparison here is thus apples to apples.

When Threats Replace Evidence

What an Australian Newspaper Article Reveals About the Vaccine Compliance Machine

Lies are Unbekoming | February 5, 2026

The Sydney Morning Herald wants you to know the penalties. Doctors and nurses who falsify vaccination records face suspension, deregistration, and jail. Parents who seek them out face fraud investigations through Services Australia. The article names specific dollar amounts ($2,500 per child), quotes the Health Minister expressing shock and outrage, and reminds readers that AHPRA—the regulatory body that controls whether medical professionals can earn a living—is watching.

The article reads less as journalism than as a warning to anyone considering dissent.

The Herald is one of Australia’s oldest and most influential newspapers, the rough equivalent of the New York Times in reach and establishment credibility. When it publishes a piece like this, it speaks with institutional authority. The January 2026 article, “Parents are paying $2500 to falsify vaccine records,” arrives at a particular moment in Australian public health: vaccine uptake has “stalled below national targets,” mandate enforcement is creating a black market for exemptions, and parents are organising in Facebook groups 40,000 members strong.

To understand the context, American readers need to know what Australia built. Between 2014 and 2019, five Australian states—New South Wales, Victoria, Queensland, South Australia, and Western Australia—rolled out “no jab, no play” laws, which bar unvaccinated children from childcare and preschool enrollment entirely. The only exemptions are medical, and these require documented life-threatening allergic reactions or severe immunocompromise—conditions so narrow that most families cannot qualify no matter their concerns.

The coercion is not subtle—and it violates the government’s own rules. Australia’s Immunisation Handbook states that valid consent must be given “voluntarily in the absence of undue pressure, coercion or manipulation.” Denying a child access to childcare unless the parents comply is textbook duress. The government has built an enforcement apparatus that fails its own stated ethical standards.

The system was designed to make non-compliance economically devastating and socially impossible. And for years, it worked. But now that system is encountering mass resistance, and the Herald article’s purpose is to make examples—to signal what happens to doctors who help parents escape a coercive system, and to parents who refuse to comply.

Buried beneath the threats is a dead baby. Riley Hughes, 32 days old, is the emotional payload. His story opens the piece, provides the moral frame, and transforms regulatory enforcement into righteous protection of the innocent. Without Riley, this article is just an inventory of punishments. With Riley, non-compliance becomes child murder.

The story requires examination.

Riley developed “mild cold symptoms” at three weeks old. His mother took him to a doctor, who said he appeared “perfectly fine.” When he stopped feeding, she took him to the children’s hospital. By day three, doctors “suspected” whooping cough. By day four, he had pneumonia. By day five, he was on life support. He died at 32 days old. Riley died in February 2015—eleven years before this article was published. The Herald reached back over a decade to find its dead baby.

The article states that “The Bordetella pertussis bug had overwhelmed his tiny body.” This is presented as fact. But reading carefully, the diagnosis was never confirmed—doctors “suspected” whooping cough. The journalist’s assertion that pertussis killed Riley is not attributed to any medical source. It is simply declared.

More striking is what the article omits entirely: what happened during those five days of hospitalisation. What interventions were administered to a three-week-old infant? What antibiotics? What was the “life support” that preceded his death? The hospital’s role in Riley’s deterioration is invisible. The medical system appears only as the place where heroic efforts were made to save him from the disease that (we are told) the unvaccinated community gave him.

The article describes Riley as “too young” to be vaccinated against whooping cough, which is given at six to eight weeks in Australia. But it does not mention that under Australian guidelines, Riley would have received the Hepatitis B vaccine within 24 hours of birth. He was not an unvaccinated child. He was a vaccinated child who had not yet received this particular vaccine.

If Riley had been completely unvaccinated, that would be the story. “Unvaccinated baby dies of preventable disease” writes itself. Instead, the article performs a subtle shift: a vaccinated infant dies after five days of hospital intervention, and an entire class of people—parents who refused to vaccinate—are scapegoated to protect the system that failed him.

None of this can be stated with certainty. We do not have Riley’s medical records. We do not know what drugs were administered, what procedures were performed, what his body endured in those five days. But that is precisely the point: neither does the Herald, and neither do its readers. The article presents a story with a hole at its centre and fills that hole with a villain—the unvaccinated community—while the institution that actually had custody of Riley during his decline remains unexamined.

What we do know: Riley was vaccinated. He received the Hepatitis B vaccine at birth, as per Australian protocol. He then spent five days in hospital care before he died. This is a vaccinated child who died after days of medical intervention—and the article repurposes his death as a case against vaccine refusal.

The mother, Catherine Hughes, is quoted: “My son would likely be alive today if everyone in my community had been fully vaccinated against whooping cough.”

This is a grieving mother’s belief, given to her by a medical system that needed someone to blame. She has since founded the Immunisation Foundation of Australia and become a professional advocate for vaccination mandates. What the Herald does not disclose: as journalist Alison Bevege has documented, her foundation received $170,000 from Sanofi in 2023 and $100,000 from GSK in 2025. Hughes herself appears in GSK press releases promoting their products. The article presents her as a spontaneous voice of bereaved motherhood. She is a paid pharmaceutical spokesperson.

The article’s foundational premise—that unvaccinated children endanger the community—is not merely unexamined. Even within the mainstream framework of germ theory and disease transmission, the published science contradicts it.

In 2014, researchers at the FDA published a study using baboons to examine how the acellular pertussis vaccine actually works. The results, within the germ theory framework the researchers operated in, were unambiguous: vaccinated baboons exposed to Bordetella pertussis showed few symptoms but became colonised with the bacteria. They were then placed in cages with unvaccinated baboons—and by the researchers’ own account, the vaccinated animals passed the bacteria to the unvaccinated ones. The study’s conclusion: “acellular pertussis vaccines protect against disease but fail to prevent infection and transmission.”

A 2015 study by Althouse and Scarpino went further. Using epidemiological, genetic, and mathematical modelling data, they argued that asymptomatic spread from vaccinated individuals “provides the most parsimonious explanation for the observed resurgence of B. pertussis in the US and UK.” Vaccinated individuals who show no symptoms carry and spread the bacteria—according to the very framework the public health establishment operates within. The authors noted that this also explains the documented failure of “cocooning”—the strategy of vaccinating family members to protect newborns. By their own logic, it doesn’t work because the vaccinated family members become silent carriers.

Even by the establishment’s own standards, the pertussis vaccine does not prevent colonisation. It does not prevent spread. What it does, according to their own researchers, is suppress symptoms in the vaccinated individual while allowing them to pass the bacterium to others, including infants too young to be vaccinated.

These are peer-reviewed studies published in the Proceedings of the National Academy of Sciences and BMC Medicine. The FDA conducted the baboon study.

Meanwhile, within this same framework, the bacterium has apparently evolved under vaccine pressure. A 2014 Australian study found that between 30% and 80% of circulating pertussis strains during a major outbreak were “pertactin-deficient”—lacking the protein the vaccine targets. The authors observed that “pertussis vaccine selection pressure, or vaccine-driven adaptation, induced the evolution of B. pertussis.”

The pertussis vaccine suppresses symptoms. Whether it also creates asymptomatic carriers who spread an apparently evolving pathogen, as the establishment’s own researchers claim, remains their narrative to defend. But even within that narrative, the unvaccinated are not the problem—the vaccine is.

When the Herald article quotes a professor warning about “one of the kids there has whooping cough or measles, and it spreads through the childcare, putting your child at risk,” the establishment’s own science suggests the spreader is more likely to be a vaccinated child with no visible symptoms than an unvaccinated child who would be home sick.

Even within the establishment’s own framework, if Riley had pertussis, the most likely source—according to their own research on asymptomatic carriage—would be a vaccinated person, perhaps someone in his own family who had been “cocooned” as the health authorities recommend. The article does not explore this possibility. It cannot, because the entire enforcement apparatus rests on the premise that the unvaccinated are the danger.

The article is not confused about the science. It is not interested in the science. Its function is compliance enforcement, and its vectors are specific.

The first vector targets medical professionals. The article names a Perth nurse charged with fraudulently recording vaccines—though the case was dropped for lack of evidence. It names a Victorian doctor whose registration was suspended. It quotes AHPRA warning that practitioners found acting fraudulently face suspension or deregistration. The message to any doctor or nurse who might help parents escape the system: we are watching, and we will destroy your career.

This is not new. In December 2020, Dr. Paul Thomas, a Portland paediatrician who had practiced for 35 years, published a peer-reviewed study comparing health outcomes in vaccinated versus unvaccinated children in his practice. The data showed unvaccinated children were significantly healthier across multiple metrics. Within days of publication, the Oregon Medical Board issued an “emergency order” suspending his licence, claiming his “continued practice constitutes an immediate danger to the public.”

The Board’s letter accused Thomas of “fraudulently” asserting that his vaccine-friendly protocol improved health outcomes—the very thing his peer-reviewed data demonstrated. His paper was later retracted under circumstances its authors describe as dubious. Thomas eventually surrendered his licence rather than continue fighting the Board’s conditions, which prohibited him from consulting with parents about vaccines or conducting further research.

The pattern is consistent. Produce evidence that challenges the orthodoxy, lose your ability to practice medicine. The threat in the Herald article is not abstract. Medical professionals in Australia have seen what happens to dissenters.

The second vector targets parents. The article reminds readers that Services Australia investigates Medicare and Centrelink fraud. Parents who pay for falsified records are not just endangering children (according to the article’s framing)—they are committing crimes against the Commonwealth. The article implies that seeking workarounds exposes parents to criminal liability, transforming a decision about their child’s medical care into a prosecutable offence.

The third vector is reputational. The article quotes the Health Minister: “I am shocked and appalled that any doctor or nurse would falsify vaccination records.” Parents in the Facebook groups are framed as reckless conspirators, their concerns about vaccine safety transmuted into selfish endangerment of babies like Riley. The 2025 study cited in the article notes that 47.9% of parents with unvaccinated children “did not believe vaccines are safe” and 46.7% “would not feel guilty if their unvaccinated child got a vaccine-preventable disease.” These statistics are presented as moral indictments.

What the article does not mention: the same study found that nearly 40% of these parents “did not believe vaccinating children helps protect others in the community.” Given the published science on pertussis—even within the establishment’s own framework—these parents have a point.

In 2004, Glen Nowak, the CDC’s director of media relations, gave a presentation to the National Influenza Vaccine Summit titled “Increasing Awareness and Uptake of Influenza Immunization.” His slides explained that vaccine demand requires “concern, anxiety, and worry” among the public. “The belief that you can inform and warn people, and get them to take appropriate actions or precautions with respect to a health threat or risk without actually making them anxious or concerned,” Nowak explained, “is not possible.”

His recipe for demand creation included medical experts stating “concern and alarm” and predicting “dire outcomes” if people don’t vaccinate. References to “very severe” and “deadly” diseases help motivate behaviour. Pandemic framing is useful.

The Herald article follows this template precisely. It opens with a dead baby. It features a professor warning about diseases “spreading through childcare.” The Health Minister invokes “serious complications, hospitalisation, and in some cases, death.” The 14 measles cases since December are presented ominously, without context about how many of those cases involved vaccinated individuals or resulted in any serious illness.

The article also quotes Dr. Niroshini Kennedy, president of the paediatrics and child health division at the Royal Australasian College of Physicians, warning about “vaccine hesitancy.” What the article does not mention: the RACP has a foundation that partners with GSK, a major pertussis vaccine manufacturer. The expert voice warning about hesitancy has institutional financial ties to a company that profits from vaccination.

The financial stakes are not abstract. GSK’s pertussis products Boostrix and Infanrix generated $2.3 billion in 2023. Sanofi’s pertussis vaccine revenue hit $1 billion in 2024, up 10.8% on the previous year, driven by booster demand. When the Herald runs a story demonising vaccine refusers, it serves an industry measured in billions.

The article acknowledges, briefly, that public health experts warned in 2019 that “vaccine mandates can backfire, and simply induce parents to seek loopholes, and, worse, fuel negative attitudes towards vaccination.” This warning has proven accurate. Australia’s escalating mandate regime has not produced the desired compliance. It has produced a $2,500 black market and Facebook groups with 40,000 members sharing strategies for resistance.

The system’s response is not to reconsider the mandates. It is to escalate enforcement and amplify fear. The article is part of that escalation.

The escalation itself is diagnostic. Systems that can defend their policies on evidence do not need to inventory punishments in the newspaper. They do not need to reach back eleven years for a dead baby. They do not need AHPRA warnings and Health Minister quotes and reminders about criminal prosecution. They make their case and let the data persuade.

What the Herald article reveals, beneath its institutional authority, is a system that has run out of persuasive tools. The sequence tells the story: first came the information campaigns, which did not produce sufficient uptake. Then came the mandates—no jab, no play—which produced compliance but also resistance. Then came enforcement against the resisters, which produced a black market. Now comes the threat display in the national press, designed to frighten the black market into submission. Each escalation is a concession that the previous level of coercion failed. Each one is more desperate than the last.

A system confident in its science would welcome questions. A system confident in its products would publish the safety data that parents are asking for. A system confident in its outcomes would point to the evidence and let parents decide. This system prosecutes nurses, deregisters doctors, denies children access to childcare, and runs articles designed to make examples of anyone who dissents. That is not the behaviour of an institution operating from strength. It is the behaviour of an institution that knows it cannot survive scrutiny—and is scrambling to ensure that scrutiny never arrives.

What parents are waking up to, slowly and in growing numbers, is that the fundamental promise—vaccinate your children and they will be protected, vaccinate enough children and the community will be protected—is not supported by the evidence, even within the framework that public health authorities operate in. What they are discovering is that asking questions produces hostility rather than answers. What they are learning is that doctors who support informed consent are being systematically removed from practice, leaving parents with no one in the medical system willing to have honest conversations.

The 40,000 parents in that Facebook group are not there because they read misinformation. They are there because they asked questions their doctors couldn’t answer, or because their child had a reaction that was dismissed, or because they did the research the system told them not to do and found that the confident assurances didn’t match the published science.

The Herald article treats these parents as a problem to be solved through enforcement. It does not entertain the possibility that they might be responding rationally to real information. It cannot, because that would require examining the science—and the science does not support the policy.

Australia has constructed a system where parents lose childcare access if they do not vaccinate, where doctors lose their licences if they support parental choice, where asking questions about vaccine safety is framed as “misinformation,” and where a dead baby is deployed to transform regulatory non-compliance into moral monstrosity.

The article calls this public health. A more accurate description: this is what happens when a policy built on faulty premises meets a population that is beginning to see through it. Unable to defend the science, the system defends itself through threats, fear, and the weaponisation of grief.

Riley Hughes deserved better than to become a propaganda tool for the companies that fund his mother’s foundation. The parents seeking exemptions deserve honest information about what vaccines can and cannot do. The doctors trying to practice informed consent deserve to keep their licences.

None of them are served by an article whose purpose is to frighten dissenters into silence.

The system is telling parents: comply or be punished, and don’t ask questions. The parents are responding: we have questions, and your threats are not answers.

That tension will not be resolved by more enforcement. It will be resolved when someone in authority has the courage to address the questions honestly—or it will continue to escalate until the system’s credibility collapses entirely.

Forty thousand parents in one Facebook group suggest which direction this is heading.

References

The Article Under Discussion:

Olaya, K. (2026, January 31). Parents are paying $2500 to falsify vaccine records. It’s endangering babies like Riley. The Sydney Morning Herald. https://www.smh.com.au/national/parents-are-paying-2500-to-falsify-vaccine-records-it-s-endangering-babies-like-riley-20260127-p5nxah.html

Catherine Hughes Financial Disclosures:

Bevege, A. (2026, February 4). ‘Baby-Killers’ – Nine Newspapers falsely claim unvaccinated people killed a baby by spreading whooping cough. Letters from Australia. https://alisonbevege.substack.com/

RACP-GSK Partnership:

GSK Australia. RACP Foundation partnership announcement. Referenced in Bevege (2026).

Vaccine Revenue Figures:

GSK. (2024). Annual Report 2023. Boostrix and Infanrix/Pediarix revenue figures.

Sanofi. (2025). Fourth Quarter 2024 Earnings Report. Polio/pertussis/HiB vaccine sales.

Pertussis Vaccine and Asymptomatic Carriage:

Warfel, J. M., Zimmerman, L. I., & Merkel, T. J. (2014). Acellular pertussis vaccines protect against disease but fail to prevent infection and transmission in a nonhuman primate model. Proceedings of the National Academy of Sciences, 111(2), 787-92. https://doi.org/10.1073/pnas.1314688110

Althouse, B. M., & Scarpino, S. V. (2015). Asymptomatic transmission and the resurgence of Bordetella pertussis. BMC Medicine, 13(1), 146. https://doi.org/10.1186/s12916-015-0382-8

Pertussis Vaccine Evolution and Waning Immunity:

Lam, C., Octavia, S., et al. (2014). Rapid increase in pertactin-deficient Bordetella pertussis isolates, Australia. Emerging Infectious Diseases, 20(4), 626-33. https://doi.org/10.3201/eid2004.131478

Tartof, S. Y., Lewis, M., et al. (2013). Waning immunity to pertussis following 5 doses of DTaP. Pediatrics, 131(4), e1047-52. https://doi.org/10.1542/peds.2012-1928

van Boven, M., Mooi, F. R., et al. (2005). Pathogen adaptation under imperfect vaccination: implications for pertussis. Proceedings of the Royal Society B, 272(1572), 1617-24. https://doi.org/10.1098/rspb.2005.3108

Dr. Paul Thomas Case:

Oregon Medical Board. (2020). In the Matter of: Paul Norman Thomas, MD. License Number MD15689: Order of Emergency Suspension. https://omb.oregon.gov/Clients/ORMB/OrderDocuments/e579dd35-7e1b-471f-a69a-3a800317ed4c.pdf

Lyons-Weiler, J., & Thomas, P. (2020). Relative Incidence of Office Visits and Cumulative Rates of Billed Diagnoses Along the Axis of Vaccination. International Journal of Environmental Research and Public Health, 17(22), 8674. [Retracted 2021]

Hammond, J. R. (2021). The War on Informed Consent: The Persecution of Dr. Paul Thomas by the Oregon Medical Board. Skyhorse Publishing.

CDC Fear-Based Messaging:

Nowak, G. (2004). Increasing Awareness and Uptake of Influenza Immunization. Presentation at the National Influenza Vaccine Summit, Atlanta, GA.

Vaccine Mandates and Backfire Effects:

Ward, J. K., et al. (2019). France’s citizen consultation on vaccination and the challenges of participatory democracy in health. Social Science & Medicine, 220, 73-80.

Suppression of Vaccine Dissent:

Martin, B. (2015). On the Suppression of Vaccination Dissent. Science and Engineering Ethics, 21(1), 143-57. https://doi.org/10.1007/s11948-014-9530-3

Australia’s No Jab, No Play Laws:

Australian state governments. No Jab, No Play legislation (2014-2019). New South Wales, Victoria, Queensland, South Australia, Western Australia.

Australian Immunisation Handbook — Consent Requirements:

Australian Government Department of Health. Australian Immunisation Handbook. Section: Valid Consent. https://immunisationhandbook.health.gov.au/

Is Canada Really Warming?

By Tom Harris | American Thinker | January 30, 2026

Is Canada really warming at double the global average rate, as the Canadian government says it is? A new report says no, because the data Environment and Climate Change Canada (ECCC) uses are apparently corrupted by fundamental mistakes, mistakes so severe that when corrected, all the supposed warming of the past six or seven decades vanishes.

Given that Canada represents a large fraction of global land surface area, one naturally wonders if the world is warming at anything like we are told it is.

This discovery should have generated mainstream media headlines across Canada. After all, the mistakes in the Canadian temperature data were discovered over four years ago by Dr. Joseph Hickey, a highly qualified Canadian data scientist, and the group I lead, the International Climate Science Coalition – Canada, has been publicizing the story for the past month.

But don’t expect mainstream media in the Great White North to say anything about this. Most of Canada’s press is heavily subsidized by the federal and provincial governments, which would probably not appreciate the story being covered. Dr. Dave Snow, associate professor of political science at the University of Guelph, writes:

“Canada has created a bevy of policies, ranging from subsidies to tax breaks to mandated contributions from foreign tech companies, that undoubtedly constitute a major portion of news outlets’ revenue and journalists’ salaries — potentially upwards of 50 percent.”

Publicly questioning government narratives on something as significant as climate change is a risky proposition for any editor when doing so leaves their paymasters with egg on their faces.

Here’s what governments in Canada, and the media outlets reliant on their largess, would rather you never heard. On December 23, the report “Artificial stepwise increases in homogenized surface air temperature data invalidate published climate warming claims for Canada” was released by Dr. Joseph Hickey, a data scientist with a PhD in Physics. The report, published by CORRELATION Research in the Public Interest, describes a significant error in Canada’s temperature data.

Using the data from ECCC for hundreds of stations across the country, scientists had previously calculated that the surface air temperature has increased 1-2 degrees Celsius over the past six to seven decades in Canada. Yet in 1998, the exact year in which 72 Canadian reference climatological stations were first added to the Global Climate Observing System, a sudden stepwise increase of approximately 1 degree Celsius occurred at most stations across the country.

Numerous studies in the scientific literature assert that sudden temperature jumps like this are not due to real climatic change but instead are caused by temperature measurement artifacts corrupting the data. They contend that this data should therefore be removed from the record. Even though one of the studies explaining this was authored by Dr. Lucie A. Vincent, the senior Environment Canada Research Climatologist, the temperature jump was left in ECCC’s data and is still there to this day. Hickey concludes, “The reported climate warming of Canada appears to be entirely from a temperature measurement artifact.”

Hickey first discovered this in 2021 when he was an analyst for the Bank of Canada and so was barred from sharing his findings with the public. After leaving the bank, he secured his communications with Environment Canada via an access to information request, which is why we know what happened next.

In that year Hickey alerted Environment Canada to the problem and explained in detail to Vincent that the sharpness of the temperature increase, and its magnitude, indicate that it is not due to real climatic change. He also laid out a thorough analysis of potential sources of the artifact, which could include land use changes and instrumentation changes. Both of these could easily cause a one-degree shift in the temperature data. Moreover, he explained, “there are no other similar large and geographically widespread discontinuities in the AHCCD dataset [Environment Canada’s flagship temperature dataset] at other years.” This increase could be responsible for almost all the claimed warming calculated for Canada over the past six or seven decades.
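The arithmetic behind that last point can be sketched with a purely synthetic series. The numbers below are invented for illustration and are not ECCC or AHCCD data; `STEP_YEAR`, `STEP_SIZE`, and `ols_slope` are hypothetical names. The sketch only shows that a flat record with a single one-degree step yields a positive fitted trend over the full period while the trend inside each segment is zero. It says nothing about what caused the step in the real record.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


# Synthetic, assumed values: a flat series with one step in 1998.
STEP_YEAR = 1998
STEP_SIZE = 1.0  # degrees Celsius
years = list(range(1950, 2021))
temps = [0.0 if y < STEP_YEAR else STEP_SIZE for y in years]

before = [(y, t) for y, t in zip(years, temps) if y < STEP_YEAR]
after = [(y, t) for y, t in zip(years, temps) if y >= STEP_YEAR]

# Trend fitted across the whole record versus within each segment.
full_slope = ols_slope(years, temps)
slope_before = ols_slope([y for y, _ in before], [t for _, t in before])
slope_after = ols_slope([y for y, _ in after], [t for _, t in after])

# Size of the step, estimated as the difference of segment means.
step_estimate = (sum(t for _, t in after) / len(after)
                 - sum(t for _, t in before) / len(before))

print(f"full-period slope: {full_slope * 10:.3f} C per decade")
print(f"slope before step: {slope_before:.3f}, after step: {slope_after:.3f}")
print(f"estimated step: {step_estimate:.2f} C")
```

A real analysis would need station-level data, noise, and a formal breakpoint test; this sketch leaves all of that out.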

Vincent essentially brushed him off, did not provide an explanation for the step increase, and said that the shift “is probably due to climate variability only.”

So, Canada is spending hundreds of billions of dollars to fight climate change largely based on data that the government scientist most involved in its generation can only say is “probably” indicative of warming.

Hickey was not the only Bank of Canada employee to find fault with ECCC’s temperature data. In his December report, he writes:

“On December 7, 2020, Bank of Canada Economist Julien McDonald-Guimond sent an email to Environment Canada researchers with an inquiry about the … daily temperature records, noting he had found some cases in which the daily minimum temperature was greater than the daily maximum temperature for the same day and for the same AHCCD station.”

In fact, there were more than 10,000 instances of days for which the daily minimum temperature was greater than the daily maximum temperature.
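The kind of consistency check McDonald-Guimond describes is simple to express. The following is a minimal sketch that assumes a hypothetical record layout (`DailyRecord`, with made-up station identifiers and values), not the actual AHCCD file format. It flags any day whose recorded minimum exceeds its recorded maximum.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DailyRecord:
    station_id: str  # hypothetical station identifier
    date: str        # ISO date string
    tmin_c: float    # daily minimum temperature, degrees Celsius
    tmax_c: float    # daily maximum temperature, degrees Celsius


def find_inverted_days(records):
    """Return the records whose daily minimum exceeds the daily maximum."""
    return [r for r in records if r.tmin_c > r.tmax_c]


# Invented sample values for illustration only.
sample = [
    DailyRecord("STN_A", "2001-03-01", -5.0, 2.0),
    DailyRecord("STN_A", "2001-03-02", 1.5, -0.5),  # inverted
    DailyRecord("STN_B", "2001-03-01", 3.0, 3.0),   # equal, not flagged
    DailyRecord("STN_B", "2001-03-02", 4.2, 4.0),   # inverted
]

inverted = find_inverted_days(sample)
for r in inverted:
    print(r.station_id, r.date, r.tmin_c, r.tmax_c)
```

Run over a full daily dataset, the length of the returned list would be the count of impossible days.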

Environment Canada Climate Data Analyst Megan Hartwell replied to McDonald-Guimond, saying that “We were quite surprised by the frequency of the issue you reported, and have taken some time to go through the data carefully.”

That ECCC were surprised by McDonald-Guimond’s finding is cause enough to worry. But the fact that they now “have taken some time to go through the data carefully” raises the question: didn’t they go through the data carefully before releasing it the first time?

Environment and Climate Change Canada have yet to respond to Hickey’s December report. They have some explaining to do.

Tom Harris is Executive Director of International Climate Science Coalition – Canada.

Climate Scientist Who Predicted End Of “Heavy Frost And Snow” Now Refuses Media Inquiries

By P Gosselin | No Tricks Zone | February 3, 2026

More than two decades ago, renowned climate scientist Mojib Latif of Germany’s Max Planck Institute for Meteorology, based in Hamburg, warned the climate-ambulance-chasing Der Spiegel that, due to global warming, Germany would likely no longer experience harsh winters with heavy frost and snow as it had in previous decades.

In light of the current severe winter weather in Germany, Latif’s statements are facing renewed scrutiny. An article appearing in the Berliner Zeitung notes that Latif’s prophecy has “aged poorly” and he appears to want to have nothing to do with it.

Hiding from the media

According to the Berliner Zeitung, the former Max Planck Institute scientist has recently stopped responding to media inquiries regarding his past claims. Critics argue that such drastic predictions damage the credibility of climate science, while others point out that extreme weather events—including intense cold snaps—can still occur within the broader context of climate change.

No Easter snow as well

Latif also claimed he recalled snow in the past occurring at Easter time, implying this no longer happens today. But that too was a false claim. Perhaps Prof. Latif will answer phone calls in April?

‘Fact-checking’ as a disinformation scheme: The Brazilian case of Agência Lupa

By Raphael Machado | Strategic Culture Foundation | February 7, 2026

Since the term “fake news” emerged in the world of political journalism, we have been confronted with a new angle through which the establishment attempts to reinforce its hegemony in the intellectual and informational sphere: by simulating ideology as science, data, or fact.

A fundamental aspect of hegemonic liberalism in the “rival-less” post-Cold War world is the transition of ideology into the diffuse realm of pure facticity. What decades earlier was clearly identified as belief comes to be taken as “data,” that is, as indisputable, not open for debate. This is the case, for example, with the myth of “democracy,” the myth of “human rights,” the myth of “progress,” and the myth of the “free market.” And today, we could extend this to the dictates of “gender ideology” and a series of other beliefs of ideological foundation, which are nevertheless taken as scientific facts.

“Fact-checking” has thus become one of the many mechanisms used by the establishment to reinforce systemic “consensus” in the face of the emergence of alternative perspectives following the popularization of the internet and independent journalism. The “authoritative” distinction made by a self-declared “independent” and “respectable” agency between what would be “fact” and what would be “fake news” has become a new source of truth.

Some liberal-democratic governments, like the USA, have gone so far as to create special departments dedicated to “combating fake news,” thus acting as authentic “Ministries of Truth” of Orwellian memory.

However, even within the “independent” sphere, we rarely encounter genuine independence. On the contrary, in fact, Western “fact-checking agencies” tend to be well-integrated into the constellation of NGOs, foundations, and associations of the non-profit industrial complex, which, in turn, is permeated by the money of large corporations and the interests of liberal-democratic governments. Even their staff tend to be revolving doors for figures coming from the NGO world, mainstream journalism, and state bureaucracy.

Although the phenomenon is of Western origin, Brazil is not exempt from it. “Fact-checking agencies” also operate here — most of them engaged in the same types of disinformation operations as the governments, newspapers, and NGOs that sponsor them.

A typical example is Agência Lupa.

Lupa was founded in 2015. Its founder, Cristina Tardáguila, previously worked for another disinformation apparatus disguised as “fact-checking,” Preto no Branco, funded by Grupo Globo (founded and owned by the Marinho family, members of which are mentioned in the Epstein Files). Lupa was financially boosted by João Moreira Salles, from the billionaire banker family Moreira Salles (of Itaú Unibanco).

Despite claiming independence from the editorial control of Revista Piauí, also controlled by the Moreira Salles family, Agência Lupa continues to be virtually hosted by Piauí’s resources, where Tardáguila worked as a journalist from 2006 to 2011. Furthermore, she also received support from the Instituto Serrapilheira, also from the Moreira Salles family, during the health crisis to act as a mechanism for imposing the pandemic consensus in what was one of the largest social experiments in human history.

In parallel, it is relevant to mention that the same João Moreira Salles was involved decades ago in a scandal after it was revealed that he had financed “Marcinho VP,” one of the leaders of the drug trafficking organization Comando Vermelho. Moreira Salles made a deal with the justice system to avoid being held accountable for this involvement.

Tardáguila was also the deputy director of the International Fact-Checking Network, an absolutely “independent” “fake news combat” network, yet funded by institutions such as the Open Society, the Bill & Melinda Gates Foundation, Google, Meta, the Omidyar Network, and the US State Department, through the National Endowment for Democracy.

Today Tardáguila no longer runs Lupa, but her “profile” on the official page of the National Endowment for Democracy (notorious funder of color revolutions and disinformation operations around the world) states that she is quite active at the Equis Institute, which counts among its funders the abortion organization Planned Parenthood, and aims to conduct social engineering against “Latino” populations.

Lupa is currently headed by Natália Leal. Contrary to the narrative of “independence,” the reality is that she has worked for several Brazilian mass media outlets, such as Poder360, Diário Catarinense, and Zero Hora, in addition to also writing for Revista Piauí, from the same Moreira Salles. Leal is less “internationally connected” than Tardáguila, but she was “graced” with an award from the International Center for Journalists, an association of “independent journalists” that, in fact, is also funded by the US State Department’s National Endowment for Democracy, the Bill & Melinda Gates Foundation, Meta, Google, CNN, the Washington Post, USAID, and the Serrapilheira Institute itself, also from Moreira Salles.

Quite clearly, it is somewhat difficult to take seriously the notion that Lupa would have sufficient autonomy and independence to act as an impartial arbiter of all narratives spread on social networks when it and its key figures themselves have these international connections, including at a governmental level.

But even on a practical level, it is difficult to take seriously the self-attributed role of confronting “fake news.” Returning to the pandemic period, for example, the differentiated treatment given by the company to the Russian Sputnik vaccine and the Pfizer vaccine is noteworthy. The former is treated with suspicion in articles written in August and September 2020, both authored by Jaqueline Sordi (who is also on the staff of the Serrapilheira Institute and a dozen other NGOs funded by Open Society), while the latter is defended tooth and nail in dozens of articles by various authors, ranging from insisting that Pfizer’s vaccines are 100% safe for children to stating that Bill Gates never advocated for reducing the world population.

On this matter, by the way, it is important to emphasize that Itaú coordinates investment portfolios that include Pfizer; there are, therefore, business interests that bring the Moreira Salles family and the pharmaceutical giant closer.

Lupa’s disinformation extends beyond Big Pharma to other places around the world. On Venezuela, for example, Lupa claims that María Corina Machado has the popular support of 72% of the Venezuelan population (based on a survey by ClearPath Strategies, an institute that is not even Venezuelan). But Lupa seems to have a particular obsession with Russia and, curiously, its alignment with the dominant narratives in Western media is absolute.

Lupa argues, for example, that the Bucha Massacre was perpetrated by Russia, using the New York Times as its sole source. Regarding Mariupol, it insists on the narrative of the Russian attack on the maternity hospital and other civilian targets, even mentioning Mariana Vishegirskaya, who now lives in Moscow, has admitted to being a paid actress in a staging organized by the Ukrainian government, and now works in the Social Initiatives Committee of the “Rodina” Foundation. It also denies the attempted genocide in Donbass and the practice of organ trafficking in Ukraine.

An article written by founder Cristina Tardáguila herself relies on the Atlantic Council as a source to accuse Russia of spreading disinformation, including the claims that Ukraine is a failed state and that it is subservient to Europe, two pieces of information that any average geopolitical analyst would calmly confirm.

A particular object of Lupa’s obsession is the Global Fact-Checking Network, of which, by the way, I am a part. It is one of the few international organizations dedicated to fact-checking in a manner independent of ideological constraints, counting among its members a team that is, certainly, much more diverse and multifaceted than the typical “revolving door” of fact-checking agencies in the Atlantic circuit, where everyone studied more or less in the same places, worked in mass media, and was, at some point, funded by or received grants from Open Society, the Bill & Melinda Gates Foundation, and/or the US State Department.

Lupa’s criterion for attacking the GFCN is… precisely obedience or not to Western mass media sources, in a circular reasoning that cannot go beyond the argument from authority.

This specific case helps to expose something of how these disinformation apparatuses typical of hybrid warfare function: they disguise themselves in the cloak of journalistic neutrality to engage in informational warfare in defense of the liberal West.